AI-Infused Applications: The Rise of Vector and Graph Databases

Vector Embeddings

cat=[1.5,−0.4,7.2,19.6,3.1]

How are vector embeddings generated?

💡 In Retrieval Augmented Generation (RAG) applications, it usually doesn't make much sense to convert an entire corpus of text, or individual words, to vector embeddings. Instead, a large corpus (exceeding 42,000 characters) gets broken down into manageable chunks of text so that a similarity search can be performed among the chunks.
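To make the chunking step concrete, here is a minimal sketch of a fixed-size character chunker with overlap. The function name `chunk_text` and the default sizes are my own assumptions for illustration; real pipelines often split on sentences, paragraphs, or tokens instead.

```python
def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into chunks of at most chunk_size characters.

    Consecutive chunks share `overlap` characters. Both defaults are
    illustrative assumptions, not recommended values.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")

    step = chunk_size - overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks
```

Each chunk would then be embedded and stored separately, so a query only has to match the part of the corpus it is actually about.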

Searching/Querying in a vector DB
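As a rough illustration of what a similarity search does, the sketch below ranks stored chunk vectors by cosine similarity to a query vector. It is a brute-force toy under my own assumptions (the names `cosine_similarity` and `top_k` and the tiny two-dimensional vectors are made up); an actual vector database typically uses an approximate nearest-neighbour index rather than comparing against every vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float],
          chunk_vecs: dict[str, list[float]],
          k: int = 3) -> list[tuple[str, float]]:
    """Return the k chunk ids most similar to the query, best first."""
    scored = [(chunk_id, cosine_similarity(query_vec, vec))
              for chunk_id, vec in chunk_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 2-dimensional "embeddings" (real ones have hundreds of dimensions).
store = {"chunk-a": [1.0, 0.0], "chunk-b": [0.0, 1.0], "chunk-c": [1.0, 1.0]}
results = top_k([1.0, 0.1], store, k=2)
```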

Relevance

Piecing it all together

Answer the following user query by only using the information provided below:

- {{ text chunks }}

User query is: {{ query }}
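Filling in the template above could look roughly like this. The helper name `build_prompt` and the sample chunks are hypothetical; only the template wording comes from this guide.

```python
PROMPT_TEMPLATE = (
    "Answer the following user query by only using the information provided below:\n\n"
    "{chunks}\n\n"
    "User query is: {query}"
)


def build_prompt(chunks: list[str], query: str) -> str:
    """Render retrieved text chunks as a bullet list and insert the user query."""
    bullet_list = "\n".join(f"- {chunk.strip()}" for chunk in chunks)
    return PROMPT_TEMPLATE.format(chunks=bullet_list, query=query.strip())


# Made-up chunks, standing in for the top results of a similarity search.
prompt = build_prompt(
    ["Refunds are accepted within 30 days.", "Shipping is free."],
    "What is the refund policy?",
)
```

The resulting string is what gets sent to the LLM, so the number of chunks you include directly affects prompt length.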

Knowledge graphs

At this point you can jump into creating a RAG pipeline right away, and the LLM would have adequate information to answer straightforward factual questions.

Use cases for RAG

- Chatbot answering questions about a refund policy

- Customer support for product information

- Simple FAQ systems

However, a mere similarity search would not surface some of the intricate details that may be crucial to providing an accurate answer.

Consider the following story:

- John was happily married to Katie for ten years

- Katie is a childhood friend of Martha

- Martha is Jake’s wife

- Jake and Trevor are best friends

- Trevor had a fight with John

- After Jake learned about this fight, he started hating Trevor

If this information was spread across a 200-page book that has been vectorized by paragraphs, a similarity search for “Why Jake dislikes Trevor so much” would likely return these specific lines:

- “Jake and Trevor are best friends”

- “Trevor had a fight with John”

- “After Jake learned about this fight, he started hating Trevor”

- “Martha is Jake’s wife”

💡 Notice that the results above do not reveal why Jake ended his friendship with Trevor, other than the fight itself. “John was happily married to Katie for ten years” and “Katie is a childhood friend of Martha” indirectly tie Katie and John to Jake’s social circle, potentially explaining why Jake cares about the fight. This is where a graph database can help.

How a Graph Database Helps

Nodes: Jake, Martha, Trevor, John, Katie

Edges:

Jake --MARRIED_TO--> Martha
Martha --FRIEND_OF--> Katie
Katie --MARRIED_TO--> John
Trevor --HAD_FIGHT_WITH--> John
Jake --FRIEND_OF--> Trevor

This structure visualizes chains of loyalty:

Jake → Martha → Katie → John vs. Jake → Trevor

The fight between Trevor and John disrupts this web, forcing Jake to “choose sides.”
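To show how such a chain can be found programmatically, here is a small in-memory sketch that runs a breadth-first search over the story's edges. It is not a graph database, just plain Python. Treating MARRIED_TO and FRIEND_OF as two-way "bond" relations, writing the relation names uniformly, and the `max_depth` cut-off are my own assumptions for this example.

```python
from collections import deque

EDGES = [
    ("Jake", "MARRIED_TO", "Martha"),
    ("Martha", "FRIEND_OF", "Katie"),
    ("Katie", "MARRIED_TO", "John"),
    ("Trevor", "HAD_FIGHT_WITH", "John"),
    ("Jake", "FRIEND_OF", "Trevor"),
]

# Assumption: only these relations count as loyalty bonds, and they work both ways.
BOND_RELATIONS = {"MARRIED_TO", "FRIEND_OF"}


def build_adjacency(edges, relations):
    """Build an undirected adjacency map using only the given relation types."""
    adjacency = {}
    for source, relation, target in edges:
        if relation not in relations:
            continue
        adjacency.setdefault(source, []).append(target)
        adjacency.setdefault(target, []).append(source)
    return adjacency


def find_path(adjacency, start, goal, max_depth=3):
    """Breadth-first search; returns the shortest path within max_depth hops, or None."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        if len(path) - 1 >= max_depth:
            continue  # do not expand beyond the depth limit
        for neighbor in adjacency.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None


adjacency = build_adjacency(EDGES, BOND_RELATIONS)
loyalty_chain = find_path(adjacency, "Jake", "John")
print(" -> ".join(loyalty_chain))  # Jake -> Martha -> Katie -> John
```

With a depth limit of 2 the same search finds nothing, which hints at why the query depth matters when retrieving from a graph.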

Building a knowledge graph

Graph retrieval

Here's a graph query:

{
  people_search(func: eq(entity, "John Doe")) {
    entity
    entity_type
    relation
    object
    object_type
  }
}

And an example output:

{
  "data": {
    "people_search": [
      {
        "entity": "John Doe",
        "entity_type": "Person",
        "relation": "OCCUPATION",
        "object": "Businessman",
        "object_type": "Occupation"
      },
      {
        "entity": "John Doe",
        "entity_type": "Person",
        "relation": "DEATH_CAUSE",
        "object": "Murder",
        "object_type": "CauseOfDeath"
      }
    ]
  }
}

Similar to what we do with the vector search results, we can embed the query output into the final prompt so that the LLM has more details pertaining to the user's question. We may need to limit the depth of the query to filter out unwanted results; finding the right depth for a given use case takes some trial and error.
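One way to embed the query output into the prompt is to flatten each result row into a short, readable line first. The sketch below assumes the response has the shape of the example output above; the function name `triples_to_context` is hypothetical.

```python
import json


def triples_to_context(response_json: str, root: str = "people_search") -> list[str]:
    """Turn graph query rows into one plain-text line per relationship."""
    rows = json.loads(response_json)["data"][root]
    return [
        f'{row["entity"]} ({row["entity_type"]}) --{row["relation"]}--> '
        f'{row["object"]} ({row["object_type"]})'
        for row in rows
    ]


# Same data as the example output in this guide.
example_response = json.dumps({
    "data": {
        "people_search": [
            {"entity": "John Doe", "entity_type": "Person",
             "relation": "OCCUPATION", "object": "Businessman",
             "object_type": "Occupation"},
            {"entity": "John Doe", "entity_type": "Person",
             "relation": "DEATH_CAUSE", "object": "Murder",
             "object_type": "CauseOfDeath"},
        ]
    }
})
context_lines = triples_to_context(example_response)
```

These lines can then go into the prompt next to the text chunks returned by the vector search.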

Conclusion

In this guide, we’ve explored the world of vector databases and their applications in text retrieval. We’ve seen how vector embeddings can be used to search for relevant text segments, and how those results can be passed to a Large Language Model (LLM) as context for answering the user's query.

We’ve also delved into the realm of graph databases, which excel at capturing complex relationships between entities. By modeling these relationships as nodes and edges, we can create a powerful knowledge graph that can help us answer nuanced questions.

The key takeaways from this guide are:

- Vector databases offer a powerful way to search for relevant text segments

- Retrieved text segments can be added to the LLM prompt as context for answering the query

- Graph databases provide a powerful way to capture complex relationships between entities

- Knowledge graphs can help us answer nuanced questions by modeling these relationships as nodes and edges

To build a comprehensive knowledge graph, we’ll need to:

- Extract key entities and relationships from user prompts using NLP models

- Model these relationships as nodes and edges in a graph database

- Add full-text indices on entity names for efficient text search

- Use LLMs to augment the context with additional information

The content under the appendix is only meant as my own reference for a future post. If you find this guide useful, please do share it :)

Appendix

Graph databases

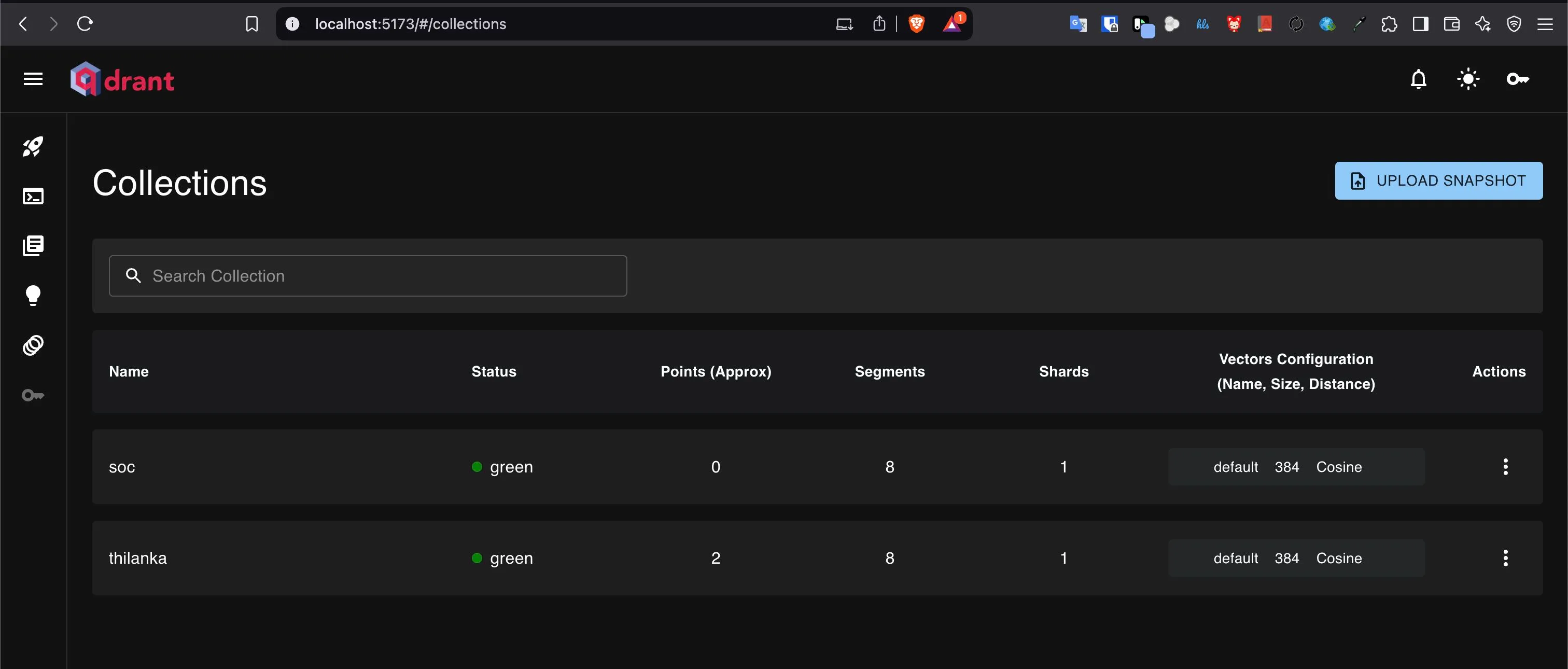

Qdrant - a vector database

Qdrant is a relatively new addition to the vector database space. What I like the most about Qdrant is how easy it is to get started developing with it. You just download the binary for your platform and launch 🚀

ulimit -n 10000

./qdrant

I had to set the ulimit to avoid getting the error: Too many open files 👀

Getting the frontend working

Although the official docs say that Qdrant’s web UI can be accessed at http://localhost:6333/dashboard, it doesn’t work out of the box at the time of writing. You have to clone their web UI repo and run it with npm:

git clone https://github.com/qdrant/qdrant-web-ui

cd qdrant-web-ui

npm i

npm start

Now you should be able to access it at http://localhost:5173 (you need to run Qdrant in a separate terminal, duh 🙄)